Writen by Yan Cescon Haeffner, Data Engineering, in 13/09/2021

5 minutes of reading

We need to talk about data build tool — From ETL to DBT

This is the second part of a series of posts about Data Build Tool (DBT) and here we’re going to understand the impacts of it for ETL pipelines.

In the first post of this series, We need to talk about data build tool, we learned about the reasons that would make dbt fit nicely into data pipelines. So today, we’ll be going through the process of moving from an ETL architecture to a DBT driven one, from the perspective of a data team.

From the domains of ETL

Since there are a lot of ways to define a pipeline to deal with the extract, transformation and load of data to a data warehouse, we first need to define the nature and boundries of the solution we are trying to adapt to DBT.

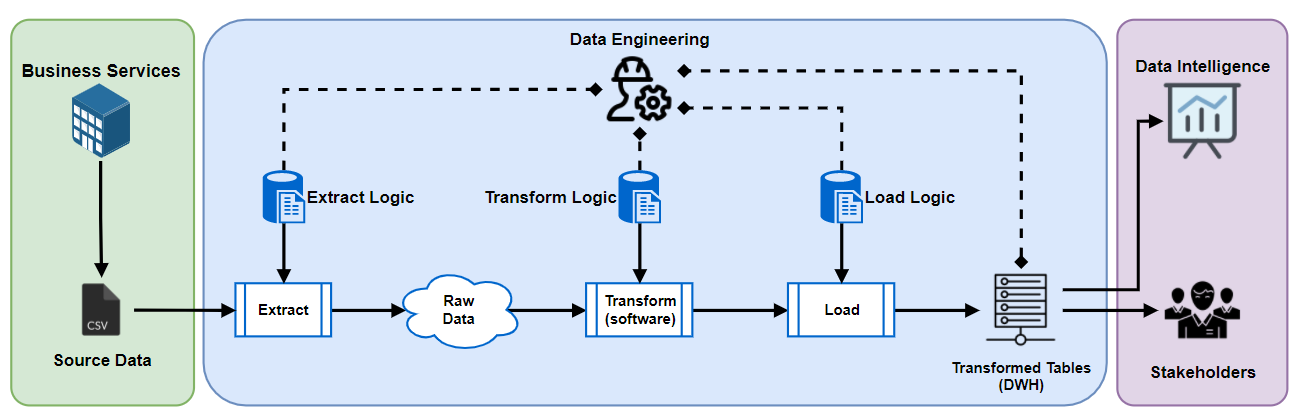

Let’s assume that we have the following pipeline being executed on a schedule:

Example of ETL pipeline team ownership

By just looking at it, we can tell that a big bottleneck here is the business knowledge required from the Data Engineering team to build and maintain this type of pipeline. It occurs because the transformation happens before the loading process, which bounds the engineering team from the beginning to the end of the data delivery pipeline.

Also, another problem that usually happens on ETL pipelines is that is kind of hard to maintain track of the data as it moves through it, since it would only centralize data on the loading process by inserting everything into the data warehouse.

So, how would you move from an existing ETL pipeline to, let’s say, something else?

By the ELT approach

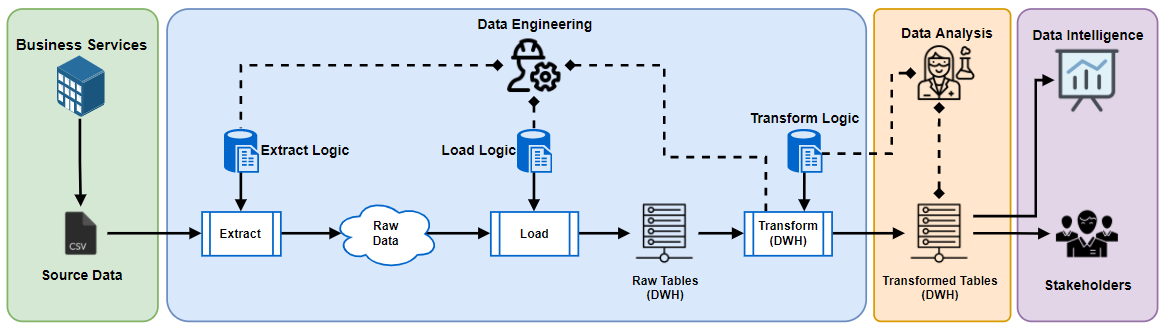

The ELT pipeline aims to solve the problem of chaining data engineers to heavy business logic concepts and lack of data trackability. A common variation of the ETL pipeline to a ELT approach looks like the following image:

Example of ELT pipeline team ownership

Now we can see an isolated ownership for the data analysis team, the ones responsible for getting deep into the business rules and transforming raw data into readable information for stakeholders (or end-point data tools).

But, as you may have guessed, there are still some transformation responsabilities to the data engineering team, and even though that responsability is way less complex than in the ETL counterpart, it still may end up bottlenecking your data pipeline as your business complexicity grows… And that is because the data engineering team is still responsible to implementing the transformation logic into the pipeline, which means that they still need to know how to properly join things up and manage things down.

Well, it really seems that we just took a data engineer and inserted some business logic (and SQL files) in there, right? There must be something that could be done in order to isolate that transformation layer even more, but what is it?!

To the realm of DBT

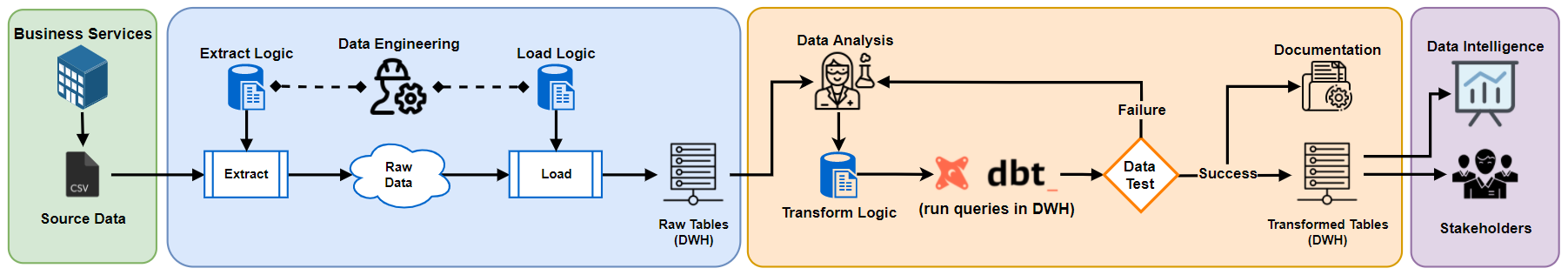

The big leap of productivity for the ELT pipeline arrives as soon as an analytics tool is implemented in the workflow, like dbt (you should really check it out here if you don’t know what I’m talking about).

The difference is so flashy that it can actually be described by one single image:

Notice how the whole transformation process now becomes something apart of the data engineering team and revolves all around the data analysis one.

It means that, by just calling some dbt runs from an orchestration tool, we are able to implement the transformation logic into the rest of the pipeline and still be able to manage its execution, add some failure/success callbacks if needed and hold every raw data we have been extracting so far.

Also, some really nice by-products from this workflow: an automated documentation (that is generated from the dbt project itself) and a very useful data validation step. These by-products generate a lot more value for the data team as a whole since now we have our data lineage documentated and we can assure to our data intelligence end that everything has been tested as well (at least with the most updated business logic), neat!

In short, ELT pipelines with dbt allows data teams to break down the increasingly complexicity of data extraction, loading and processing tasks while increasing their team productivity, sanity and data ownership!

But it is all fun and games until you need to implement this on your production environment, right? Don’t worry, we got you covered.

And even further beyond

Now that we talked about dbt and the benefits of adding it to your ELT data pipeline, we should finally be able to discuss about how to implement it… But since the Fishtown Analytics team (and community) already made an awesome job to teach you on how to create your first dbt project, I will reserve the next talk to something a little bit edgy…

On the next post, and the last part of this series about data build tool, we will get some hands-on implementation of a dbt project into an orchestration tool.

Spoiler alert: we will use Airflow to orchestrate a very similar ELT pipeline to the one we discussed here (using the starter dbt tutorial from Fishtown Analytics)!

Don’t know what Airflow is? Please make sure to check out this post in order to learn the basics then!

See you on the next post!

Opening image credits: Jan Tinneberg on Unsplash